DiffH2O: Diffusion-Based Synthesis of Hand-Object Interactions from Textual Descriptions

Session

Hand and Human

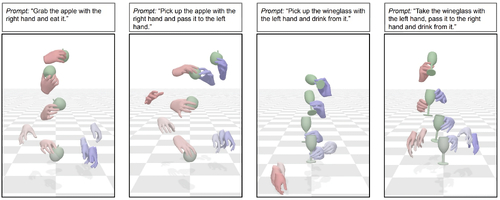

Description

We introduce DiffH2O, a new diffusion-based framework for synthesizing realistic, dexterous hand-object interactions from natural language. Our model employs a temporal two-stage diffusion process that divides hand-object motion generation into a grasping stage and an interaction stage, improving generalization to diverse object shapes and textual prompts. To further improve generalization to unseen objects and increase output controllability, we propose grasp guidance, which steers the diffusion model towards a target grasp and seamlessly connects the grasping and interaction stages through a motion imputation mechanism. We demonstrate the practical value of grasp guidance using target hand poses extracted from images or produced by grasp synthesis methods. Additionally, we provide detailed textual descriptions for the GRAB dataset, enabling fine-grained text-based control of the model output. Our quantitative and qualitative evaluations show that DiffH2O generates realistic hand-object motions from natural language, generalizes to unseen objects, and significantly outperforms existing methods on a standard benchmark and in perceptual studies.

Event Type

Technical Papers

Time

Friday, 6 December 2024, 3:19pm - 3:31pm JST

Location

Hall B7 (1), B Block, Level 7