WaveBlender: Practical Sound-Source Animation in Blended Domains

Session

(Do) Make Some Noise

Description

Synthesizing plausible sound sources for modern physics-based animation is exceptionally challenging due to complex animated phenomena that form rapidly moving, deforming, and vibrating interfaces which produce acoustic waves within the air domain. Not only must the methods synthesize sounds that are faithful and free of digital artifacts, but, in order to be practical, the methods must also be fast, easy to implement, and support fast parallel hardware. Unfortunately, no current solutions can satisfy these many conflicting constraints.

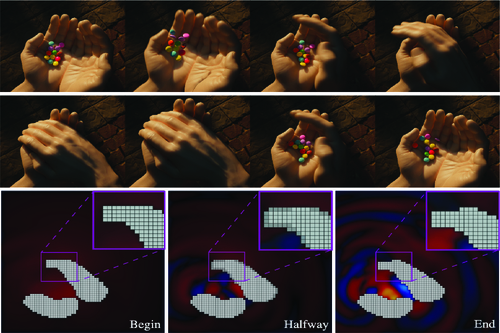

In this paper, we introduce WaveBlender, a simple and fast GPU-accelerated finite-difference time-domain (FDTD) acoustic wavesolver for simulating popular physics-based animation sound sources on uniform grids. To resolve continuously moving and deforming solid- or fluid-air interfaces on uniform grids, we derive a novel scheme that can temporally blend between two subsequent finite-difference discretizations. Our 𝛽-blending scheme requires minimal modification of the original FDTD update equations along with only a single new blending parameter, 𝛽, defined at cell centers, and modified velocity-level boundary conditions. WaveBlender is able to convincingly synthesize sounds for a variety of physics-based animation sound sources (water, modal, thin shells, kinematic deformers, and re-recording), along with point-like sound sources for tiny rigid bodies. Our solver is robust across different resolutions, achieves good GPU parallelization, and is over an order of magnitude faster than prior CPU-based wavesolvers for these animation sound problems.
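
For orientation, the kind of baseline uniform-grid FDTD update that such a scheme starts from can be sketched as below. This is a generic textbook staggered-grid leapfrog scheme in 1D with rigid walls, written in plain Python for readability. It is not the WaveBlender solver and does not implement 𝛽-blending; the function name `fdtd_1d` and all parameter values (grid size, step count, CFL number, pulse width) are assumptions chosen for illustration only.

```python
import math


def fdtd_1d(num_cells=200, num_steps=150, c=343.0, rho=1.2, dx=0.01, cfl=0.5):
    """Generic 1D staggered-grid FDTD for linear acoustics (illustrative only).

    Pressure p is stored at cell centers and velocity v at cell faces.
    Both end faces are rigid walls, i.e. v stays zero there.
    """
    dt = cfl * dx / c  # time step from the CFL number (assumed value 0.5)
    p = [0.0] * num_cells
    v = [0.0] * (num_cells + 1)

    # Initial condition: a Gaussian pressure pulse in the middle of the grid.
    center = num_cells // 2
    for i in range(num_cells):
        p[i] = math.exp(-((i - center) / 5.0) ** 2)

    for _ in range(num_steps):
        # Velocity update on interior faces: dv/dt = -(1/rho) dp/dx.
        # Faces 0 and num_cells are skipped, which enforces the rigid walls.
        for i in range(1, num_cells):
            v[i] -= dt / (rho * dx) * (p[i] - p[i - 1])
        # Pressure update at cell centers: dp/dt = -rho c^2 dv/dx.
        for i in range(num_cells):
            p[i] -= rho * c * c * dt / dx * (v[i + 1] - v[i])
    return p


if __name__ == "__main__":
    final_pressure = fdtd_1d()
    print(max(final_pressure))
```

Each cell update reads only its immediate neighbors, which is why updates of this form map naturally onto GPU threads. On a fixed grid like this one the boundary faces never move; handling interfaces that move through the grid is the problem the abstract's blending scheme addresses.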

Event Type

Technical Papers

Time

Friday, 6 December 2024, 1:28pm - 1:42pm JST

Location

Hall B7 (1), B Block, Level 7