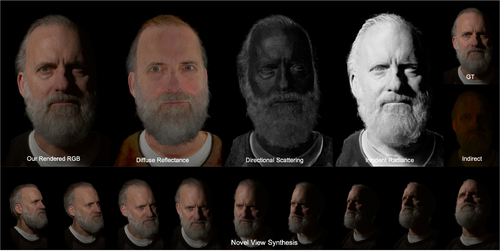

LitNeRF: Intrinsic Radiance Decomposition for High-Quality View Synthesis and Relighting of Faces

Description

High-fidelity, photorealistic 3D capture of a human face is a long-standing problem in computer graphics -- the complex material of skin, intricate geometry of hair, and fine-scale textural details make it challenging. Traditional techniques rely on very large and expensive capture rigs to reconstruct explicit mesh geometry and appearance maps and require complex differentiable path-tracing to achieve photorealistic results. More recent volumetric methods (e.g., NeRFs) have enabled view synthesis and sometimes relighting by learning an implicit representation of the density and reflectance basis, but suffer from artifacts and blurriness due to the inherent ambiguities in volumetric modeling. These problems are further exacerbated when capturing with few cameras and light sources. We present a novel technique for high-quality capture of a human face for 3D view synthesis and relighting using a sparse, compact capture rig consisting of 15 cameras and 15 lights. Our method combines a volumetric representation of the face reflectance with traditional multi-view-stereo-based geometry reconstruction. The proxy geometry allows us to anchor the 3D density field to prevent artifacts and guide the disentanglement of intrinsic radiance components of the face appearance such as diffuse and specular reflectance, and incident radiance (shadowing) fields.

Our hybrid representation significantly improves the state-of-the-art quality for arbitrarily dense renders of a face from a desired camera viewpoint, as well as under environmental, directional, and near-field lighting.

Event Type

Technical Papers

Time

Tuesday, 12 December 2023, 9:30am - 12:45pm

Location

Darling Harbour Theatre, Level 2 (Convention Centre)
