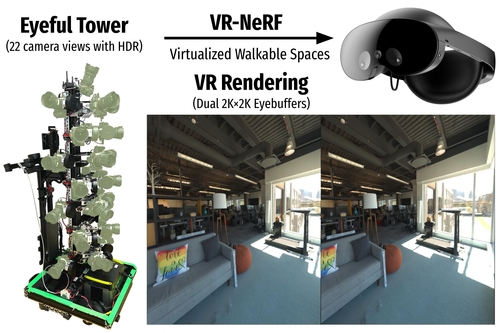

VR-NeRF: High-Fidelity Virtualized Walkable Spaces

Session

Technoscape

Description

We present an end-to-end system for the high-fidelity capture, model reconstruction and real-time rendering of walkable spaces in virtual reality using neural radiance fields. To this end, we designed and built a custom multi-camera rig to densely capture walkable spaces in high fidelity with multi-view high dynamic range images in unprecedented quality and density. We extend instant neural graphics primitives with a novel perceptual color space for learning accurate HDR appearance, and an efficient mip-mapping mechanism for level-of-detail rendering with anti-aliasing, while carefully optimizing the trade-off between quality and speed. Our multi-GPU renderer enables high-fidelity volume rendering of our neural radiance field model at the full VR resolution of dual 2K×2K at 36 Hz on our custom demo machine. We demonstrate the quality of our results on our challenging high-fidelity datasets, and compare our method and datasets to existing baselines.

Event Type

Technical Communications

Technical Papers

Time

Wednesday, 13 December 2023, 5:45pm - 5:55pm

Location

Meeting Room C4.8, Level 4 (Convention Centre)