360-degree Human Video Generation with 4D Diffusion Transformer

Session: Hand and Human

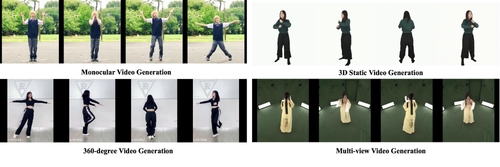

Description: We present a novel approach for generating high-quality, spatio-temporally coherent 360-degree human videos from a single image. Our framework combines the strengths of diffusion transformers for capturing global correlations across viewpoints and time, and CNNs for accurate condition injection. The core is a hierarchical 4D transformer architecture that factorizes self-attention across views, time steps, and spatial dimensions, enabling efficient modeling of the 4D space. Precise conditioning is achieved by injecting human identity, camera parameters, and temporal signals into the respective transformers. To train this model, we collect a multi-dimensional dataset spanning images, videos, multi-view data, and limited 4D footage, along with a tailored multi-dimensional training strategy. Our approach overcomes the limitations of previous methods based on generative adversarial networks or vanilla diffusion models, which struggle with complex motions, viewpoint changes, and generalization. Through extensive experiments, we demonstrate our method's ability to synthesize realistic, coherent 360-degree human motion videos, paving the way for advanced multimedia applications in areas such as virtual reality and animation.
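To make the idea of factorized self-attention concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation: the tensor layout `(views, time, tokens, channels)`, the spatial-then-view-then-time ordering, the residual connections, and every function name are hypothetical, and the attention is single-head with no learned projections. It also omits the conditioning on identity, camera parameters, and temporal signals that the description mentions.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(tokens):
    """Single-head self-attention over the second-to-last axis.

    No learned query/key/value projections are used (an assumption made
    to keep the sketch short); all leading axes act as batch axes.
    """
    d = tokens.shape[-1]
    scores = tokens @ np.swapaxes(tokens, -1, -2) / np.sqrt(d)
    return softmax(scores, axis=-1) @ tokens


def attend_along(x, axis):
    """Run self-attention along one axis of a (V, T, N, C) tensor."""
    moved = np.moveaxis(x, axis, -2)   # bring the chosen axis next to channels
    out = self_attention(moved)
    return np.moveaxis(out, -2, axis)  # restore the original layout


def factorized_4d_block(x):
    """Hypothetical block: spatial, then view, then temporal attention."""
    x = x + attend_along(x, axis=2)  # tokens within each frame
    x = x + attend_along(x, axis=0)  # same token and time step, across views
    x = x + attend_along(x, axis=1)  # same token and view, across time steps
    return x


def attention_pairs(v, t, n):
    """Count token pairs scored by joint vs. factorized attention."""
    total = v * t * n
    full = total ** 2                # one joint attention over all tokens
    factorized = total * (n + v + t) # three attentions, one per axis
    return full, factorized


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(4, 6, 16, 8))  # 4 views, 6 frames, 16 tokens, 8 channels
    y = factorized_4d_block(x)
    print(y.shape)
    print(attention_pairs(4, 6, 16))
```

The pair count shows where the efficiency comes from: joint attention over all `V*T*N` tokens scores `(V*T*N)^2` pairs, while the factorized form scores `V*T*N*(N + V + T)`, because each token only attends within its own frame, across views, and across time in separate passes.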

Event Type: Technical Papers

Time: Friday, 6 December 2024, 2:45pm - 2:56pm JST

Location: Hall B7 (1), B Block, Level 7