OLAT Gaussians for Generic Relightable Appearance Acquisition

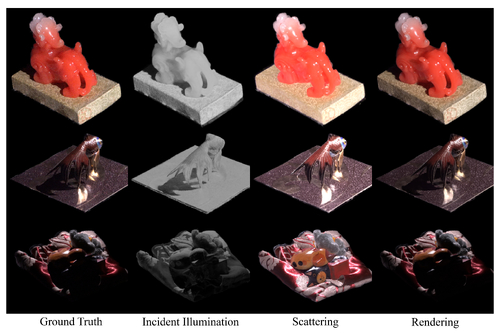

Description

One-light-at-a-time (OLAT) images sample a broader range of object appearance changes than images captured under constant lighting, which makes them better input for object relighting. Although existing methods achieve reasonable relighting quality from OLAT images, they rely on surface-like representations, which limits their ability to model volumetric objects such as fur. Moreover, their rendering is time-consuming and still far from real-time. To address these issues, we propose OLAT Gaussians, which build relightable representations of objects from multi-view OLAT images. We build our pipeline on 3D Gaussian Splatting (3DGS), which achieves high-quality real-time rendering. To give 3DGS relighting capability, we assign each Gaussian a learnable feature vector that serves as an index for querying the object’s appearance field. Specifically, we decompose the appearance field into a light transport function and a scattering function. The former accounts for light transmittance and foreshortening effects, while the latter represents how the object’s material scatters light. Rather than using an off-the-shelf physically based parametric rendering formulation, we model both functions with multi-layer perceptrons (MLPs). This makes our method suitable for a wide variety of objects, e.g., opaque surfaces, semi-transparent volumes, fur, and fabrics. Given a camera view and a point light position, we compute each Gaussian’s color as the product of the light transport value, the scattering value, and the light intensity, and then render the target image with the 3DGS rasterizer. To further improve rendering quality, we use a proxy mesh that provides OLAT Gaussians with normals, which improve highlights, and with visibility cues, which improve shadows. Extensive experiments demonstrate that our method achieves state-of-the-art rendering quality, with significantly more detail in texture-rich areas than previous methods.
Our method also renders in real time, letting users interactively change the view and lighting and see the result immediately, which the offline rendering of previous methods cannot offer.
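The per-Gaussian color model described above (light transport × scattering × light intensity, followed by 3DGS compositing) can be sketched in a few lines. This is a minimal, hypothetical illustration, not the authors' implementation: the tiny pure-Python MLPs, their sizes, the choice of inputs (per-Gaussian feature plus light and view directions), the sigmoid output activations, and the names `gaussian_color` and `composite` are all assumptions. Per-Gaussian alpha values, which 3DGS derives from each Gaussian's opacity and projected 2D footprint, are passed in directly, and the proxy-mesh normals and visibility cues are omitted.

```python
import math
import random

# Hypothetical sketch: tiny pure-Python MLPs stand in for the paper's networks.

def stable_sigmoid(v):
    """Element-wise logistic function, written to avoid overflow in math.exp."""
    out = []
    for a in v:
        if a >= 0:
            out.append(1.0 / (1.0 + math.exp(-a)))
        else:
            e = math.exp(a)
            out.append(e / (1.0 + e))
    return out

def init_mlp(sizes, rng):
    """Random (weights, bias) pairs for layer sizes such as [in, hidden, out]."""
    return [([[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)],
             [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def mlp(x, layers):
    """ReLU hidden layers; sigmoid on the last layer so outputs lie in (0, 1)."""
    for i, (w, b) in enumerate(layers):
        x = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(w, b)]
        x = stable_sigmoid(x) if i == len(layers) - 1 else [max(0.0, a) for a in x]
    return x

def unit(v):
    """Normalize a 3-vector to unit length."""
    n = math.sqrt(sum(a * a for a in v))
    return [a / n for a in v]

def gaussian_color(feature, center, cam_pos, light_pos, light_rgb,
                   transport_net, scatter_net):
    """Color = light transport (scalar) * scattering (RGB) * light intensity (RGB)."""
    w_i = unit([l - c for l, c in zip(light_pos, center)])  # toward the point light
    w_o = unit([e - c for e, c in zip(cam_pos, center)])    # toward the camera
    transport = mlp(feature + w_i, transport_net)[0]         # assumed inputs: feature, w_i
    scatter = mlp(feature + w_i + w_o, scatter_net)          # assumed inputs: feature, w_i, w_o
    return [transport * s * l for s, l in zip(scatter, light_rgb)]

def composite(colors, alphas):
    """Front-to-back alpha blending of depth-sorted Gaussians for one pixel."""
    pixel, trans = [0.0, 0.0, 0.0], 1.0
    for c, a in zip(colors, alphas):
        pixel = [p + trans * a * ck for p, ck in zip(pixel, c)]
        trans *= 1.0 - a  # remaining transmittance after this Gaussian
    return pixel

FEAT = 8  # hypothetical feature-vector length
rng = random.Random(0)
transport_net = init_mlp([FEAT + 3, 16, 1], rng)
scatter_net = init_mlp([FEAT + 6, 16, 3], rng)

# Two Gaussians covering one pixel, sorted front to back (toy values).
features = [[rng.uniform(-1, 1) for _ in range(FEAT)] for _ in range(2)]
centers = [[0.0, 0.0, 0.0], [0.0, 0.0, -0.1]]
cam, light, light_rgb = [0.0, 0.0, 3.0], [2.0, 2.0, 2.0], [1.0, 1.0, 1.0]
colors = [gaussian_color(f, c, cam, light, light_rgb, transport_net, scatter_net)
          for f, c in zip(features, centers)]
print(composite(colors, alphas=[0.6, 0.8]))
```

Because neither network takes the light intensity as input in this sketch, changing only the light's brightness or color rescales every Gaussian's color linearly without re-querying the MLPs; moving the light or camera, by contrast, changes the directions fed to both networks.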

Event Type

Technical Papers

Time

Tuesday, 3 December 2024, 3:08pm - 3:19pm JST

Location

Hall B5 (2), B Block, Level 5