MV2MV: Multi-View Image Translation via View-Consistent Diffusion Models

SessionDiffuse and Conquer

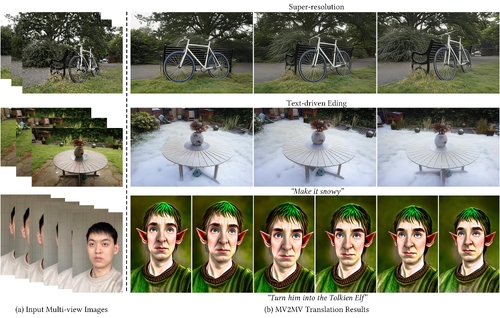

DescriptionImage translation has various applications in computer graphics and computer vision, aiming to transfer images from one domain to another. Thanks to the excellent generation capability of diffusion models, recent single-view image translation methods achieve realistic results. However, directly applying diffusion models for multi-view image translation remains challenging for two major obstacles: the need for paired training data and the limited view consistency. To overcome the obstacles, we present a unified multi-view image to multi-view image translation framework based on diffusion models, called MV2MV. Firstly, we propose a novel self-supervised training strategy that exploits the success of off-the-shelf single-view image translators and the 3D Gaussian Splatting (3DGS) technique to generate pseudo ground truths as supervisory signals, leading to enhanced consistency and fine details. Additionally, we propose a latent multi-view consistency block, which utilizes the latent-3DGS as the underlying 3D representation to facilitate information exchange across multi-view images and inject 3D prior into the diffusion model to enforce consistency. Finally, our approach simultaneously optimizes the diffusion model and 3DGS to achieve a better trade-off between consistency and realism. Extensive experiments across various translation tasks demonstrate that MV2MV outperforms task-specific specialists in both quantitative and qualitative.

Event Type

Technical Papers

TimeThursday, 5 December 20245:28pm - 5:40pm JST

LocationHall B5 (2), B Block, Level 5