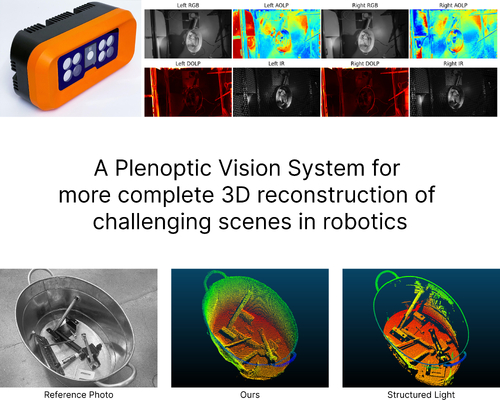

A Plenoptic 3D Vision System

Description

We present a novel multi-camera, multi-modal vision system designed for industrial robotics applications. The system generates high-quality 3D point clouds, with a focus on improving completeness and reducing hallucinations for collision avoidance across various geometries, materials, and lighting conditions. Our system incorporates several key advancements: (1) a modular and scalable Plenoptic Stereo Vision Unit that captures high-resolution RGB, polarization, and infrared (IR) data for enhanced scene understanding; (2) an Auto-Calibration Routine that enables the seamless addition and automatic registration of multiple stereo units, expanding the system's capabilities; (3) a Deep Fusion Stereo Architecture, a state-of-the-art deep learning architecture trained entirely on synthetic data that effectively fuses multi-baseline and multi-modal data for superior reconstruction accuracy. We demonstrate the impact of each design decision through rigorous testing, showing improved performance across varying lighting, geometry, and material challenges. To benchmark our system, we create an extensive industrial-robotics-inspired dataset featuring sub-millimeter-accurate ground-truth 3D reconstructions of scenes with challenging elements such as sunlight, deep bins, transparency, reflective surfaces, and thin objects. Our system surpasses the performance of state-of-the-art high-resolution structured light on this dataset. We also demonstrate generalization to non-robotics polarization datasets. Interactive visualizations and videos are available at https://www.intrinsic.ai/publications/siggraphasia2024.

Event Type

Technical Papers

Time

Tuesday, 3 December 2024, 9:00am - 12:00pm JST

Location

Hall C, C Block, Level 4