AR-DAVID: Augmented Reality Display Artifact Video Dataset

Session

Color and Display

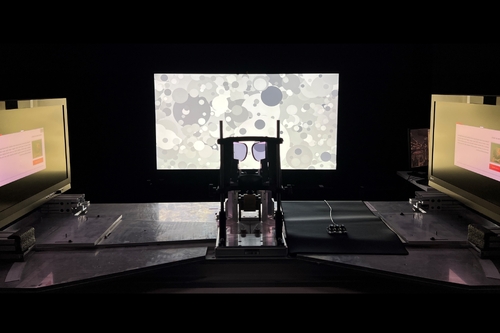

Description

The perception of visual content in optical see-through augmented reality (AR) devices is affected by the light coming from the environment. This additional light interacts with the content in a non-trivial manner because of the illusion of transparency, different focal depths, and motion parallax.

To investigate the impact of environment light, we created the first video-quality dataset targeted toward AR displays. The goal was to capture the effect of AR display artifacts (such as blur or color fringes) on video quality in the presence of a background. Our dataset consists of 6 scenes, each affected by one of 6 distortions at 2 strength levels, seen against one of 3 background patterns shown at 2 luminance levels: 432 conditions in total. Our dataset shows that the environment light has a much smaller masking effect than expected. Further, we show that this effect cannot be explained by compositing the AR content with the background using optical blending simulations. As a consequence, we demonstrate that existing video quality metrics do a poor job of predicting the perceived magnitude of degradation in AR displays, prompting the need for further research.
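The condition count follows from a full factorial design: 6 × 6 × 2 × 3 × 2 = 432. As a minimal sketch, the grid can be enumerated as below. All labels (`scene_1`, `distortion_1`, and so on) are hypothetical placeholders, since the description gives only the number of levels per factor, not their names.

```python
from itertools import product

# Hypothetical placeholder labels: the description states only how many
# levels each factor has, not what the levels are called.
scenes = [f"scene_{i}" for i in range(1, 7)]            # 6 scenes
distortions = [f"distortion_{i}" for i in range(1, 7)]  # 6 distortions
strengths = ["strength_1", "strength_2"]                # 2 strength levels
backgrounds = [f"background_{i}" for i in range(1, 4)]  # 3 background patterns
luminances = ["luminance_1", "luminance_2"]             # 2 luminance levels

# Full factorial design: every combination of the five factors.
conditions = list(product(scenes, distortions, strengths, backgrounds, luminances))

print(len(conditions))  # 432
```

Each tuple in `conditions` identifies one test condition, which makes it straightforward to confirm that a results table covers the whole design.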

Event Type

Technical Papers

Time

Tuesday, 3 December 2024, 5:16pm - 5:28pm JST

Location

Hall B7 (1), B Block, Level 7