Correlation-aware Encoder-Decoder with Adapters for SVBRDF Acquisition

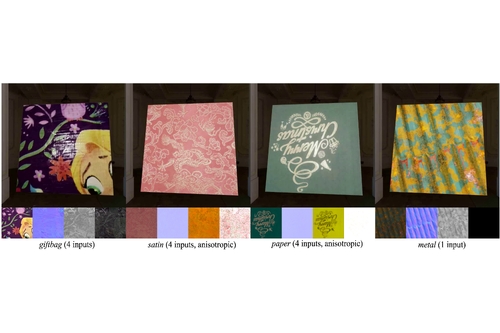

Description

Capturing materials from the real world avoids laborious manual material authoring. However, recovering high-fidelity Spatially Varying Bidirectional Reflectance Distribution Function (SVBRDF) maps from a few captured images is challenging because the problem is ill-posed. Existing approaches try to alleviate this ambiguity by leveraging generative models with latent space optimization or by extracting features with encoder-decoder variants. Although images rendered at the input views can match the input images, a flawed decomposition among the maps leads to significant differences when rendering under novel views or lighting. We observe that, for human eyes, the correlation among input images (that is, the variation of the highlights), and not only the individual images, serves as an important hint for recognizing the materials of objects. Hence, our key insight is to explicitly model this correlation in the SVBRDF acquisition network. To this end, we propose a correlation-aware encoder-decoder network that models the correlation features among the input images via a graph convolutional network, treating the channel features from each image as a graph node. This significantly reduces the ambiguity among the maps. However, several SVBRDF maps still tend to be over-smooth, leading to a mismatch in novel-view rendering. The main reason is that a single decoder interpreting all maps updates the different maps unevenly. To address this issue, we further design an adapter-equipped decoder consisting of a main decoder and four tiny per-map adapters, where the adapters interpret the individual maps and, together with fine-tuning, enhance flexibility. As a result, our framework allows both the optimization of the latent space, with the input image feature embeddings as the initial latent vector, and the fine-tuning of the per-map adapters. Consequently, our method outperforms existing approaches both visually and quantitatively on synthetic and real data.
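The two ideas in the description, graph convolution over per-image features and small per-map adapters on a shared decoder feature, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The fully connected graph, the feature sizes, the random weights, the linear form of the adapters, and the map names (`normal`, `diffuse`, `roughness`, `specular`) with their channel counts are all assumptions made for illustration; the description only states that there are four per-map adapters.

```python
import numpy as np

def normalized_adjacency(num_nodes):
    """Fully connected graph with self-loops, symmetrically normalized.

    Assumption: every input image is connected to every other one. With this
    choice the propagation reduces to averaging over nodes; a learned or
    similarity-based adjacency would behave differently.
    """
    adj = np.ones((num_nodes, num_nodes))
    d_inv_sqrt = 1.0 / np.sqrt(adj.sum(axis=1))
    return adj * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def graph_conv(node_feats, weight):
    """One graph-convolution step: ReLU(A_hat @ H @ W)."""
    a_hat = normalized_adjacency(node_feats.shape[0])
    return np.maximum(a_hat @ node_feats @ weight, 0.0)

def decode_with_adapters(shared_feat, adapters):
    """Apply one small linear adapter per map to a shared decoder feature."""
    return {name: shared_feat @ w for name, w in adapters.items()}

rng = np.random.default_rng(0)
num_images, channels = 4, 8  # hypothetical sizes

# One node per input image; each node holds that image's channel features.
node_feats = rng.standard_normal((num_images, channels))
weight = rng.standard_normal((channels, channels))
correlated = graph_conv(node_feats, weight)

# Stand-in for the main decoder output: pool the correlated node features.
shared_feat = correlated.mean(axis=0)

# Hypothetical map names and output channel counts, one adapter each.
out_channels = {"normal": 3, "diffuse": 3, "roughness": 1, "specular": 3}
adapters = {name: rng.standard_normal((channels, c))
            for name, c in out_channels.items()}
maps = decode_with_adapters(shared_feat, adapters)
```

In a setup like this, only the small `adapters` weights (and the latent input) would be updated during per-capture fine-tuning, which is what would keep each map's update independent of the others.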

Event Type

Technical Papers

Time

Tuesday, 3 December 2024, 9:00am - 12:00pm JST

Location

Hall C, C Block, Level 4