Neural Differential Appearance Equations

Session

Differentiable Rendering

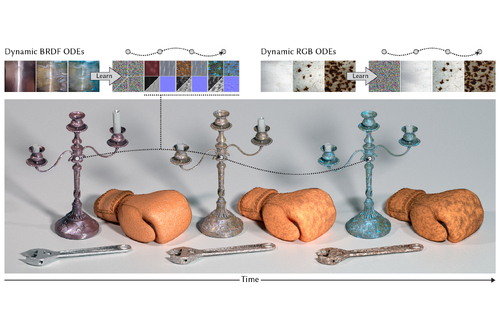

Description

We propose a method to reproduce dynamic appearance textures with space-stationary but time-varying visual statistics.

While most previous work decomposes dynamic textures into static appearance and motion, we focus on dynamic appearance that results not from motion but from variations of fundamental properties, such as rusting, decaying, melting, and weathering.

To this end, we adopt the neural ordinary differential equation (ODE) to learn the underlying dynamics of appearance from a target exemplar.

We simulate the ODE in two phases.

In the "warm-up" phase, the ODE diffuses random noise into an initial state.

We then constrain the further evolution of this ODE to replicate the evolution of visual feature statistics in the exemplar during the generation phase.
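The two-phase simulation described above can be illustrated with a minimal sketch. This is not the authors' implementation: `toy_dynamics` is a hypothetical hand-written stand-in for the learned network, the forward Euler integrator is chosen only for brevity, and the convention that warm-up runs over negative time before generation starts at t = 0 is an assumption made for illustration.

```python
import numpy as np

def toy_dynamics(state, t, weights):
    """Hypothetical stand-in for a learned derivative f(state, t)."""
    h, w = state.shape[:2]
    # Periodic padding so the 3x3 neighborhood average wraps at the borders.
    padded = np.pad(state, ((1, 1), (1, 1), (0, 0)), mode="wrap")
    neighbors = sum(
        padded[i:i + h, j:j + w] for i in range(3) for j in range(3)
    ) / 9.0
    # Diffusion-like smoothing plus a time-dependent drift term.
    return weights["diffuse"] * (neighbors - state) + weights["drift"] * t * np.tanh(state)

def integrate(state, t_start, t_end, n_steps, weights):
    """Forward Euler integration of the ODE from t_start to t_end."""
    dt = (t_end - t_start) / n_steps
    t = t_start
    for _ in range(n_steps):
        state = state + dt * toy_dynamics(state, t, weights)
        t += dt
    return state

def synthesize(shape, frame_times, warmup=1.0, steps_per_unit=20, seed=0):
    """Run the warm-up phase from noise, then emit one state per frame time."""
    rng = np.random.default_rng(seed)
    weights = {"diffuse": 1.0, "drift": 0.5}  # illustrative constants, not learned
    state = rng.standard_normal(shape)
    # Phase 1 (warm-up): evolve random noise into an initial state at t = 0.
    state = integrate(state, -warmup, 0.0, int(warmup * steps_per_unit), weights)
    # Phase 2 (generation): keep integrating the same ODE and record frames.
    frames, t_prev = [], 0.0
    for t in frame_times:
        n_steps = max(1, int(round((t - t_prev) * steps_per_unit)))
        state = integrate(state, t_prev, t, n_steps, weights)
        frames.append(state.copy())
        t_prev = t
    return frames

frames = synthesize((8, 8, 3), [0.25, 0.5, 1.0])
```

In a trained system, the derivative function would be a neural network, and the generation-phase states would be supervised so that their feature statistics follow those of the exemplar over time. The sketch only shows the control flow of a single ODE serving both phases.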

The particular innovation of this work is the neural ODE achieving both denoising and evolution for dynamics synthesis, with a proposed temporal training scheme.

We study both relightable (BRDF) and non-relightable (RGB) appearance models.

For both, we introduce new pilot datasets that allow such phenomena to be studied for the first time:

For RGB, we provide 22 dynamic textures acquired from free online sources;

For BRDF, we acquire a dataset of 21 flash-lit videos of time-varying materials, enabled by a simple-to-construct setup.

Our experiments show that our method consistently yields realistic and coherent results, whereas prior works falter under pronounced temporal appearance variations.

A user study confirms our approach is preferred to previous work for such exemplars.

Event Type

Technical Papers

Time

Friday, 6 December 2024, 9:23am - 9:34am JST

Location

Hall B5 (2), B Block, Level 5