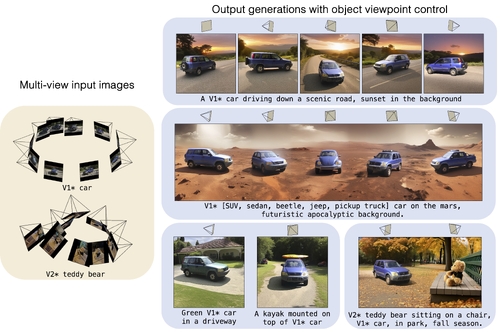

Customizing Text-to-Image Diffusion with Object Viewpoint Control

Description

Model customization introduces new concepts to existing text-to-image models, enabling the generation of these new concepts/objects in novel contexts.

However, such methods lack accurate camera view control with respect to the new object, and users must resort to prompt engineering (e.g., adding "top-view") to achieve coarse view control. In this work, we introduce a new task: enabling explicit control of the object viewpoint in the customization of text-to-image diffusion models. This allows us to modify the custom object's properties and generate it in various background scenes via text prompts, all while incorporating the object viewpoint as an additional control. This new task presents significant challenges, as one must harmoniously merge a 3D representation from the multi-view images with the 2D pre-trained model. To bridge this gap, we propose to condition the diffusion process on the 3D object features rendered from the target viewpoint. During training, we fine-tune the 3D feature prediction modules to reconstruct the object's appearance and geometry, while reducing overfitting to the input multi-view images. Our method outperforms existing image editing and model customization baselines in preserving the custom object's identity while following the target object viewpoint and the text prompt.

Event Type

Technical Papers

Time

Tuesday, 3 December 2024, 1:46pm - 1:58pm JST

Location

Hall B7 (1), B Block, Level 7