Dynamic Neural Radiosity with Multi-grid Decomposition

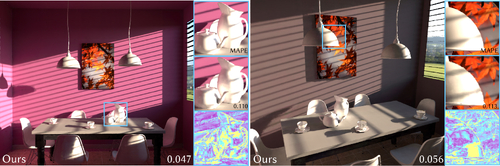

Description

Prior approaches to the neural rendering of global illumination typically rely on complex network architectures and training strategies to model the global effects. This often leads to impractically high overheads for both training and inference. The neural radiosity technique marks a significant advancement by injecting the radiometric prior into the training process, allowing for efficient modeling of the global radiance fields using a lightweight network and grid-based representations. However, this method encounters difficulties in modeling dynamic scenes, as the high-dimensional feature space quickly becomes unmanageable as the number of varying scene parameters grows. In this work, we extend neural radiosity for variable scenes through a novel neural decomposition method. To achieve this, we first parameterize the animated scene with an explicit vector $\mathbf{v}$, which conditions a high-dimensional radiance field $L_{\theta}$. We then develop a practical representation for $L_{\theta}$ by decomposing the high-dimensional feature grid into 3D grids, 2D feature planes, and lightweight MLPs. This strategy effectively models the correlation between 3D spatial features and dynamic scene variables, while maintaining a practical memory and computational cost. Experimental results show that our method facilitates efficient dynamic global illumination rendering with practical runtime performance, outperforming previous state-of-the-art techniques with both reduced training and inference costs.
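To make the decomposition idea concrete, the following is a minimal NumPy sketch of one plausible reading of it, not the authors' architecture. It assumes a single 3D spatial grid, one 2D plane per pair of spatial axis and scene variable, an element-wise product to combine features, and a two-layer MLP decoder. The names `DecomposedField`, `lerp_grid_3d`, `lerp_plane_2d`, and `dense_grid_param_count`, and all resolutions and channel counts, are hypothetical. The sketch covers only the representation of $L_{\theta}$ conditioned on $\mathbf{v}$; the radiosity-based training is omitted, and view direction is left out for brevity.

```python
import numpy as np


def lerp_grid_3d(grid, p):
    """Trilinearly interpolate grid of shape (R, R, R, C) at p in [0, 1]^3."""
    res = grid.shape[0]
    x = np.clip(p, 0.0, 1.0) * (res - 1)
    i0 = np.minimum(x.astype(int), res - 2)  # lower corner of the cell
    t = x - i0                               # fractional offset in the cell
    out = np.zeros(grid.shape[-1])
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                w = ((t[0] if dx else 1 - t[0])
                     * (t[1] if dy else 1 - t[1])
                     * (t[2] if dz else 1 - t[2]))
                out += w * grid[i0[0] + dx, i0[1] + dy, i0[2] + dz]
    return out


def lerp_plane_2d(plane, u, v):
    """Bilinearly interpolate plane of shape (R, R, C) at (u, v) in [0, 1]^2."""
    res = plane.shape[0]
    q = np.clip(np.array([u, v]), 0.0, 1.0) * (res - 1)
    j0 = np.minimum(q.astype(int), res - 2)
    s = q - j0
    out = np.zeros(plane.shape[-1])
    for du in (0, 1):
        for dv in (0, 1):
            w = (s[0] if du else 1 - s[0]) * (s[1] if dv else 1 - s[1])
            out += w * plane[j0[0] + du, j0[1] + dv]
    return out


def dense_grid_param_count(num_vars, res, channels):
    """Size of a naive dense grid over 3 spatial axes plus all scene variables."""
    return res ** (3 + num_vars) * channels


class DecomposedField:
    """Hypothetical decomposed radiance field: 3D grid x 2D planes -> small MLP."""

    def __init__(self, num_vars, res=8, channels=4, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.grid = rng.normal(size=(res, res, res, channels))
        # planes[k, a] pairs spatial axis a with scene variable k (assumption).
        self.planes = rng.normal(size=(num_vars, 3, res, res, channels))
        self.w1 = rng.normal(size=(channels, hidden)) * 0.1
        self.w2 = rng.normal(size=(hidden, 3)) * 0.1

    def features(self, p, v):
        f = lerp_grid_3d(self.grid, p)
        for k, vk in enumerate(v):
            # Sum the three axis/variable planes, then modulate the 3D feature.
            plane_feat = sum(lerp_plane_2d(self.planes[k, a], p[a], vk)
                             for a in range(3))
            f = f * plane_feat
        return f

    def radiance(self, p, v):
        hidden = np.maximum(self.features(p, v) @ self.w1, 0.0)  # ReLU
        return hidden @ self.w2                                  # RGB output

    def feature_param_count(self):
        return self.grid.size + self.planes.size


field = DecomposedField(num_vars=2)
rgb = field.radiance(np.array([0.3, 0.5, 0.9]), np.array([0.25, 0.75]))
print(rgb.shape, field.feature_param_count(), dense_grid_param_count(2, 8, 4))
```

Under these assumptions, each additional scene variable adds three 2D planes, so feature storage grows linearly with the length of $\mathbf{v}$, whereas a dense grid over position and all variables grows exponentially.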

Event Type

Technical Papers

Time

Tuesday, 3 December 2024, 9:00am - 12:00pm JST

Location

Hall C, C Block, Level 4